Architecture https://chipsandcheese.com/p/amds-cdna-3-compute-architecture https://chipsandcheese.com/p/amd-rdna-3-5s-llvm-changes https://chipsandcheese.com/p/microbenchmarking-amds-rdna-3-graphics-architecture

https://espadrine.github.io/blog/posts/recomputing-gpu-performance.html

https://gpuopen.com/learn/wmma_on_rdna3/

Prior

https://www.reddit.com/r/LocalLLaMA/comments/191srof/amd_radeon_7900_xtxtx_inference_performance/ https://www.reddit.com/r/LocalLLaMA/comments/1atvxu2/current_state_of_training_on_amd_radeon_7900_xtx/ https://www.reddit.com/r/LocalLLaMA/comments/1ghvwsj/llamacpp_compute_and_memory_bandwidth_efficiency/ https://www.reddit.com/r/LocalLLaMA/comments/1fssvbm/september_2024_update_amd_gpu_mostly_rdna3_aillm/

https://cprimozic.net/notes/posts/machine-learning-benchmarks-on-the-7900-xtx/

Convos

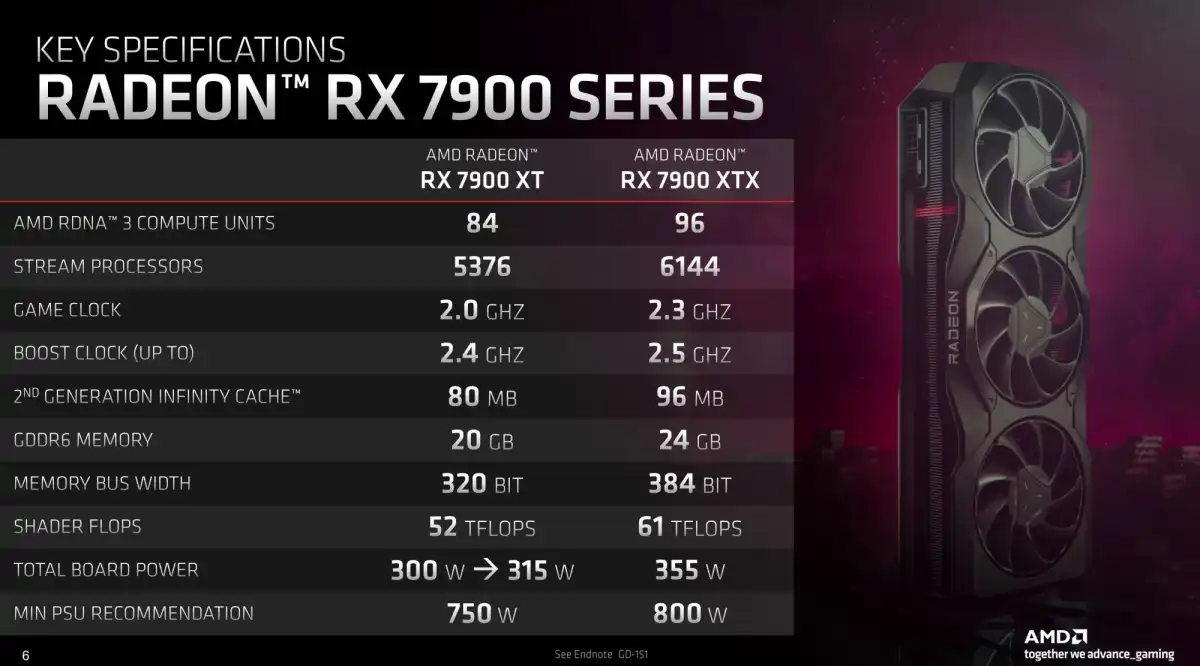

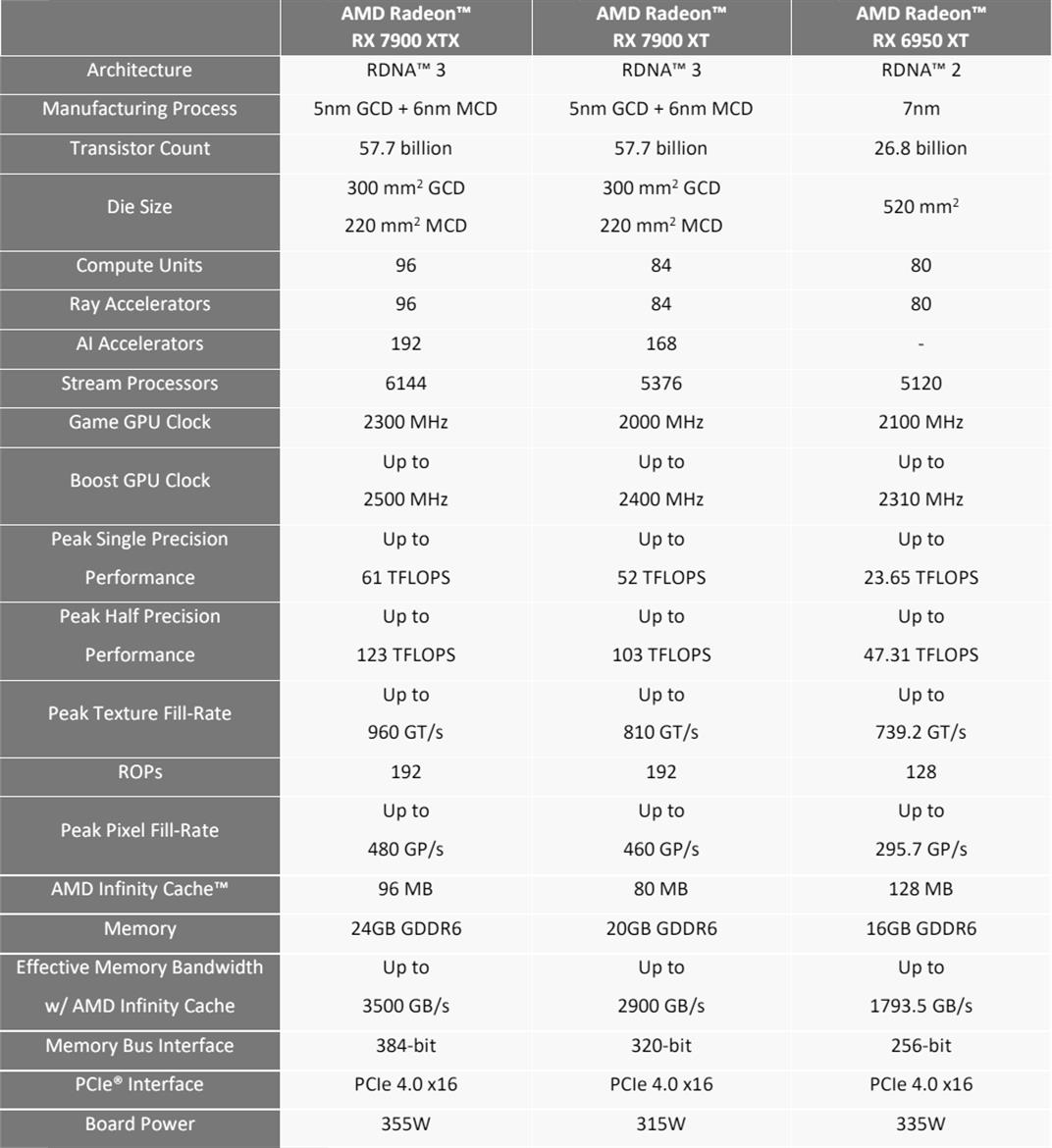

GPU Specs Table: https://claude.ai/chat/ec80af9d-40e8-431d-b0b6-ebd2be11fde4

Nvidia Architecture, detailed Memory Levels https://claude.ai/chat/2c271405-2df3-46ff-af2e-1d9b9ef85184

AMD comparison, wave32 vs wave64 https://claude.ai/chat/a73ec329-88d0-48b8-b116-a70b26e6302c